I get this question all the time. More often, it's phrased as a statement. Every once in a while, it's an in-your-face assertion. I could be referring to my halitosis, but not this time. I am talking about converting data from an RGB sensor of some sort into color measurements.

The question/assertion has come in many forms:

- I have this iPhone app that is sooooo cool! It gives me a paint formula to take to the hardware store!

- How can I convert the RGB values from my desktop scanner into CIELAB?

- I just put this Magic Color Measurer Device on the fabric, and it tells me the color so I can design bedroom colors around my client's favorite pajama.

- All I need is this RGB camera on the printing press to adjust color.

My quick response - the results will be disappointing.

What color is Jennifer Aniston's forehead?

I used Google images to find pictures of Jennifer Aniston. I selected six, as shown on the left side of the image below. I then zoomed in and selected one pixel indicative of the color of her forehead. The color of those six pixels is shown in the rectangles on the right.

What color is Jennifer's forehead?

This illustrates a few things. First, it shows that pictures of an attractive woman can get people to look at a blog. I have just started writing the blog, and already two people have looked at this blog! Second, it shows that our eye can be pretty good at ignoring glaring differences in color. Sometimes. At least on the left. On the right, those same glaring differences are, well, glaring.

But, for the purposes of this blog, this little exercise illustrates the variety of color measurements that a camera could make of the same object.

We could just write this off as the problem with cheap cameras, but let's face it. If you were going to get close enough to Jennifer Aniston to be able to catch a glam shot of her, wouldn't you go out and get the most expensive camera that you could afford? Especially if you were going to go to all the trouble of getting that image on the internet??!?! I think we can pretty well expect that the cameras used for these shots were top of the line.

Lighting has a big effect on the color, but the spectral response of the camera is also an issue. As we shall see...

The Experiment

Here is the experiment I performed. I made a lovely pattern of oil pastel marks on a piece of paper. I used the eleven colors that everyone can agree on: brown, pink, gray, black, white, purple, blue, green, yellow, orange, red.

I then taped that paper to my computer monitor and made a replica of this pattern on the screen. I adjusted the lighting in the room and the colors of each patch on the monitor so that, to my eye, the patches came pretty close to matching.

The equipment in my experiment

Then I got out my camera. The image below is an unretouched photo.

I don't know what you see on your own computer monitor, but I see some colors that are just blatantly different. While my eye said the two pinks were very close, the camera said that the one on the left is darker. The gray pastel is definitely not gray... it's a light brown. The white on the paper is more of a peach color. And the purple? OMG... They certainly don't match. Actually, the photo of the one on the paper looks closer to what my eye saw.

On the other hand, the blacks match, and the blues, green, and reds are all good.

In some cases, the camera saw what I saw. In other cases, it did not.

Maybe I just don't have a good enough camera? My camera is not "top of the line", by the way, but it's decent - it's a Canon G10. I tried this same thing with the camera in my Samsung cellphone and my wife's iPhone. Similar results.

Note to would-be developers of RGB to CIELAB transforms: The pairs of colors above must map to the same CIELAB values, since they looked the same to me. Your software must be able to map different sets of RGB values to the same CIELAB values. "Many to one."

I haven't demonstrated this, but the reverse is also true. "One to many." Your magic software must be able to take one RGB value and map it sometimes to one CIELAB value, and sometimes to another. How will it know which one to convert to? Whichever one is correct.

In other words, IT CAN'T WORK! No amount of neural networking with seventh degree polynomial look up tables can get around the fact that the CIELAB information isn't there. The software has no information to help it decide cuz there are many CIELAB values that could result in that one RGB value.
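To make the "one to many" problem concrete, here is a minimal numerical sketch. Everything in it is made up for illustration: the Gaussian curves are stand-ins for camera and eye sensitivities (not measured data from any real camera or from the eye), and the names `bell`, `camera`, `eye`, and `invisible` are hypothetical. It builds two reflectance spectra that give the stand-in camera the same RGB values while the stand-in eye channels respond differently.

```python
import numpy as np

# Wavelength grid in nanometers, 400 to 700 in 10 nm steps.
wl = np.arange(400.0, 701.0, 10.0)

def bell(center, width):
    """Gaussian-shaped sensitivity curve. A stand-in, not measured data."""
    return np.exp(-0.5 * ((wl - center) / width) ** 2)

# Hypothetical sensitivities: rows are channels, columns are wavelengths.
camera = np.stack([bell(600, 30), bell(540, 30), bell(460, 30)])  # R, G, B
eye = np.stack([bell(565, 45), bell(540, 40), bell(445, 25)])     # L, M, S

# Split the eye's L curve into the part the camera channels can reproduce
# and a leftover part that is orthogonal to all three camera channels.
coeffs, *_ = np.linalg.lstsq(camera.T, eye[0], rcond=None)
invisible = eye[0] - camera.T @ coeffs  # camera @ invisible is ~0

# Two reflectance spectra: flat gray, and gray plus the invisible part.
spectrum_a = np.full(wl.shape, 0.5)
spectrum_b = spectrum_a + 0.4 * invisible / np.abs(invisible).max()

rgb_a, rgb_b = camera @ spectrum_a, camera @ spectrum_b
lms_a, lms_b = eye @ spectrum_a, eye @ spectrum_b

print("camera RGB difference:", np.abs(rgb_b - rgb_a).max())
print("eye LMS difference:   ", np.abs(lms_b - lms_a).max())
```

The first number should come out at rounding-error level and the second should not. Whatever transform you bolt onto the camera gets the same RGB in both cases, so it has to return the same CIELAB for two surfaces that the eye channels tell apart.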

What went wrong?

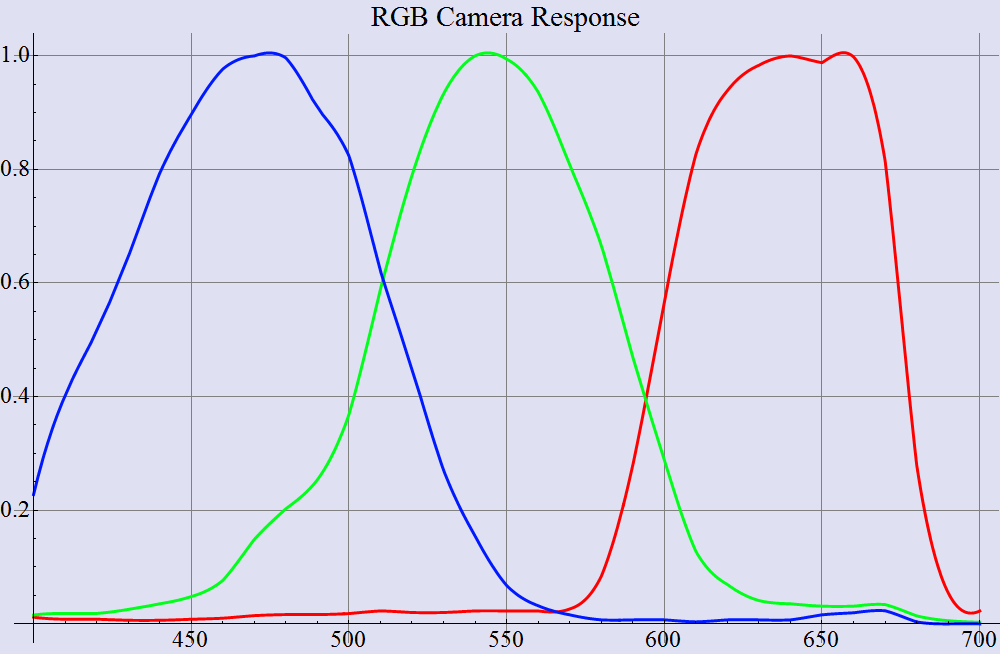

I submit exhibit A below, a graph that shows the spectral response of a typical RGB camera. (This one is not the response of my G10 - it is from some other camera.)

Spectral response of one RGB camera

For comparison, I show a second graph, which is the spectral response of the human eye.

Spectral response of the human eye

There are some very distinct differences. The most obvious is that the red channel in the eye is shifted considerably to the left. There is an astonishing amount of overlap between the red and green channels. The green channel of the eye has been approximated closely by the camera, but the blue channel on the camera is much too broad.

(I should point out that real color scientists don't even call these "red, green, and blue". Because the response of the eye is sooooo unlike red, green, and blue, they are called "L", "M", and "S", for long, medium and short wavelength.)

The consequence of this difference is that an RGB camera - or any other RGB sensor - sees color in a fundamentally different way than our eyes do. They don't all have the same spectral response as that of the camera above, but none of them look much like the response of the human eye.

I never metamer I didn't like

The word "metamer" comes to mind. Metamer, by the way, is the password for all meetings of the American Confabulation of Color Eggheads Lacking Social Skills. Memorize that word, and you can get into any meetings. You'll thank me later.

Two objects are metamers of one another if their colors match under one light, but not under another. Metamerism is a constant issue in the print industry since color matches of CMYK inks to real world objects will almost always be metameric. Print will never match the color of real objects. The fancy underthings in the Victoria's Secret catalog are guaranteed to look different when my wife models them at home.

The following pictures might well help to make metamerism as confusing as possible. I have pasted a GATF/RHEM indicator on a part of a CMYK test target. The first image below is similar to what I see when I view this in the incandescent light in my dining room. The RHEM patch that has the words "IGHT NOT" in it is a bit darker than its friend to the right, and the two patches above are almost kinda the same color.

Now we start getting confusing. The camera didn't snap this picture under incandescent light. This picture was illuminated with natural daylight.

Photographed under daylight

Ok, maybe that's not confusing yet. But let's move the studio into my kitchen where I have halogen lights. Note that the whole image has shifted redder, but the relationships among the colors are similar to the daylight picture.

Or are they? Take a look at the RHEM patch and compare it with the CMYK patch directly above it. Previously, they were kind of the same hue. No longer. And the other two patches (RHEM and the one above it) have gotten closer in color.

Photographed under halogen light

Alright... still not real confusing. Let's try under some other light source. This one will blow your mind.

Next, I photographed that same thing under the fluorescent light in my laundry room. The striking thing is that the stripes on the RHEM patch are completely gone as far as the camera can tell. This is in contrast to what my eyes see. My eyes tell me that the stripes in the RHEM patch have reversed. To my eye, the darker stripes are now lighter than the others.

Big point here - for color transform software to work, it has to take the measurements from the adjacent RHEM patches below (which are nearly identical) and map them to CIELAB values that are very different.

Photographed under one set of fluorescent bulbs

Finally, I pulled out a white LED bulb, and tried again. Here again, the stripes are gone as far as the camera is concerned, but I can see the stripes. If I compare the image under the white LED versus under the fluorescent, it can be seen that the white LEDs bring out the purple when compared against the previous. The RHEM patch in the photo above looks almost brown in comparison.

Photographed under white LED lighting

In summary, the camera does not see colors the same way that we do.

I spoke in rather black and white terms at the very beginning, saying that getting CIELAB out of RGB just plain won't work. Maybe I am just being pedantic? Maybe I am just bellyaching cuz it gets lonely in my ivory tower?

Lemme just say this... You know the photo shoot where they took the picture of the Victoria's Secret model? That wasn't done in my ivory tower, and it wasn't done with my Canon G10. The real photographers would laugh at my little camera. Their camera cost about twice my annual salary. And guess what? Every single photo from the photo shoot went into Photoshop for a human to perform color correction because their expensive camera doesn't see color the same way as the eye.

Quantifying the issue

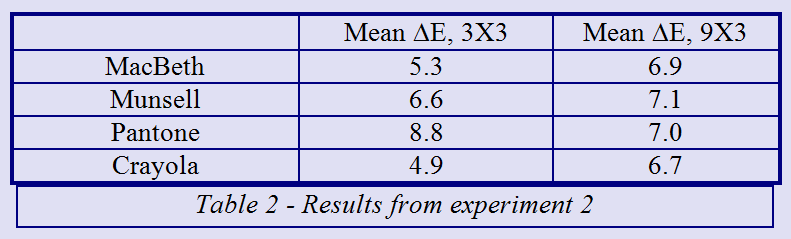

In a 1997 paper, I used the spectral response of a real RGB camera, and the spectra of a zillion different real world objects to perform a test of a color transform, RGB to CIELAB. I calibrated the transform using one set of spectra of printed CMYK colors. As can be seen, if I used a 9X3 matrix transform, I could get color errors of between 1.0 ΔE and 2.0 ΔE when I transformed other CMYK sets. This is not quite as good as some purveyors of RGB transforms claim, but it's still usable for some applications.
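For readers who want to see what a 9X3 matrix transform looks like in code, here is a minimal sketch. The post does not say which nine terms the matrix multiplies, so the term set below (R, G, B, their squares, and the three cross products) is an assumption, as are the function names `expand`, `fit_transform`, and `apply_transform`. The data is synthetic and only checks that the fitting machinery works.

```python
import numpy as np

def expand(rgb):
    """Expand N x 3 RGB rows into N x 9 polynomial terms (an assumed term set)."""
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.column_stack([r, g, b, r * r, g * g, b * b, r * g, r * b, g * b])

def fit_transform(rgb_train, lab_train):
    """Least-squares 9 x 3 matrix M so that expand(rgb) @ M approximates lab."""
    m, *_ = np.linalg.lstsq(expand(rgb_train), lab_train, rcond=None)
    return m

def apply_transform(m, rgb):
    """Map N x 3 RGB rows to N x 3 estimated CIELAB rows."""
    return expand(rgb) @ m

# Synthetic sanity check: invent a "true" matrix, generate targets from it,
# and confirm that the fit recovers it. This is NOT real colorimetric data.
rng = np.random.default_rng(0)
rgb = rng.uniform(0.0, 1.0, size=(50, 3))
m_true = rng.normal(size=(9, 3))
lab = expand(rgb) @ m_true
m_fit = fit_transform(rgb, lab)
print("recovered the synthetic matrix:", np.allclose(m_fit, m_true))
```

Recovering a made-up matrix from made-up data says nothing about accuracy on real colors. A fit like this can only be as good as the match between the calibration set and whatever the camera is later pointed at.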

But this was all done with CMYK printing ink on glossy paper. What happens if we use that same transform to go from RGB to CIELAB for something other than printing ink? Table 2 shows that all heck breaks loose. If I try to transform RGB values from the Macbeth ColorChecker, a set of patches from the Munsell color atlas, a collection of Pantone inks, or a set of crayons, the average color error is now up around 7.0 ΔE. I don't think this is usable for any application.

Ok, that's lousy, but hold onto your hats sports fans! I tried this same 9X3 transform on a hypothetical set of LEDs, simulating what the camera would see when pointed at those LEDs one at a time, and I used the magic transform to compute the CIELAB values. The worst of the color errors was kinda big. Well, quite big, actually. Hmmm... maybe even "large". Or, perhaps more accurately, one might call the color error ginormously humongomegahorribligigantiferous. 161 ΔE. That's not 1.61. That's one hundred and sixty-one delta E of color error introduced by using this marvelous color transform. This is called over-fitting your data.

I don't know if this has been surpassed in the past 17 years since I wrote the paper, but at the time, this was the largest color error ever reported in a technical paper.
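For a sense of scale on that 161 ΔE, here is a small sketch of a color difference calculation. The post does not say which ΔE formula the paper used; the sketch assumes the simplest one, CIE76, which is the straight-line distance between two CIELAB points. The function name `delta_e_76` is hypothetical.

```python
import math

def delta_e_76(lab1, lab2):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab1, lab2)

# Two nearby colors: same lightness, a* differs by 3, b* differs by 4.
small = delta_e_76((50.0, 0.0, 0.0), (50.0, 3.0, 4.0))

# Ideal black (L* = 0) to ideal white (L* = 100), both neutral.
black_to_white = delta_e_76((0.0, 0.0, 0.0), (100.0, 0.0, 0.0))

print(small, black_to_white)
```

Under this assumed formula, the distance from black to white is 100 ΔE, so an error of 161 ΔE is bigger than mistaking black for white.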

Conclusion

I have focused on just one aspect of getting a color measurement right, that of having the proper spectral response. Don't start with RGB.

But if you still fancy building an RGB color sensor or writing an iPhone app, let me forewarn you. There are numerous other challenges. Enough to keep me blogging for pretty much the rest of the year. There's measurement geometry (lighting angle, measurement angle, aperture size for both viewing and illuminating), stability of photometric zero and illumination, quantum noise floor, fluorescence, backing material -- these topics all come to mind. Once you move beyond the notion that RGB will work for you, then you gotta get these under control.

TANSTAAFL - There Ain't No Such Thing As a Free Lunch. If it were easy to build an accurate color measurement device with a web cam, then the expensive spectrophotometers from X-Rite, and Konica Minolta, and Techkon, and Datacolor and Barbieri would all be obsolete.

Further reading

Seymour, John, Why do color transforms work?, Proc. SPIE Vol. 3018, p. 156-164, 1997

Seymour, John, Capabilities and limitations of color measurement with an RGB Camera, PIA/GATF Color Management Conference, 2008

Seymour, John, Color measurement with an RGB camera, TAGA Proceedings 2009

Seymour, John, Color measurement on a flexo press with an RGB camera, Flexo Magazine, Feb. 2009

Great article John, as always - there is so much good stuff here.

One thing from near the beginning, I think that when properly configured, an RGB camera can do a credible job of printer control. "Properly configured" means that the camera characterization understands the (presumably 1:1) mapping of CMY to color. Then it is "just" a question of mapping camera RGBs to printer CMYs. Not trivial, but possible, and the delta E's should stay well below 100.

Also, for anyone who wants to delve further into use of three sensors to predict arbitrary color, ask The Google about the "Luther Condition."

- DW

Thanks Dave.

I am going to disagree on your comment about doing a "credible job".

Once upon a time, there was a color control system for printing presses that used an RGB camera. It did a fairly decent job at measuring density, but when ISO 12647 started pushing people toward measuring CIELAB, I undertook a one-year project to try to squeeze accurate CIELAB out of an RGB camera.

I had the benefit of ten man-years of development of a solid RGB photometer (not a simple task), and I had the benefit of two other smart guys who each put about six months of effort into this specific goal. Based on this, I claim that I have met Niels Bohr's criteria for being an expert in the art of RGB to CIELAB transforms. ("An expert is someone who has made all the mistakes possible in his narrow field.")

The end result is that 12 man years of effort says "it won't be good enough". We spent ~$1M on developing a true spectro.

The problems are that a) while the collection of all CMY spectra is theoretically three dimensional, it's not linear, b) the addition of K makes it at least four dimensional, c) dot gain is not linear, so a change in dot gain introduces a bunch more spectra, d) gloss of the paper will in effect add "white" as another dimension, and e) the color of the paper adds yet another dimension. Oh... and then there's spot colors!

ISO 13655 defines that a spectro for measuring the color of print must have at least 15 channels.

That said... I determined (in later work) that within a press run, the deviation of color can be characterized into delta L, a, and b by an RGB camera to within a reasonable tolerance. That means that if there is some other mechanism for bringing the color up during makeready, then the RGB camera can monitor color from there. But, the big savings for a printer is during makeready - saving waste by getting to color ok quickly.

I think for my purposes of making wall art that matches decor in the room, I can compare Munsell values on the smartphone and come up with a pretty decent result in a mixed paint at Lowes.

The challenges of measuring color are many, and I won't live long enough to solve them all. I studied art with Henry Hensche, even made a movie about him that's on YouTube. His view of art history is that we are now at a time, based on Claude Monet's work; showing the same scene in different times of day and weather conditions, that we MUST be aware of the "time of day" to really communicate the truth of what we're looking at. Colors are affected by that, no doubt.

Your experiments really show how lighting affects the color.

Our human desire to do things "perfectly" seems out of place when all we're looking to do is produce a wall art that fits a certain space and decor.

Have you seen on the Munsell color blog the lady that incorporates Munsell values into her designing of carpets and rugs? She found a guy in India I think that has swatches in many colors, but nowhere near the many possibilities that Munsell designs.

Keep up the good work. If we live forever we can solve these problems, but until Jehovah God's Kingdom comes, we'll have to just do our best. For more information about God's Kingdom, check out jw.org.