When I started in the printing industry, we didn't have all those fancy colors for ink. All we had was white. Decorative packaging was white, but that was ok cuz all we had to sell was rocks. Books were printed with white ink, which, at the time, was good enough. No one knew how to read. Hadn't been invented yet.

And then someone had to go and invent white paper. We were screwed and we knew it. Our response? There were, of course, the white paper deniers who eventually invented red ink. Those of us who survived the great "white ink is dead" upheaval innovated, and this is how black ink was invented.

White ink is dead? Now what are we gonna do!?!?

As I recall, we were burning the midnight oil trying to find innovations that would eclipse the disruptive technology of white paper. Some were working on creating whiter ink. Some worked on ways to package rocks that didn't require packaging. By the way, I don't think anyone ever made money on that one. They found it hard to sell a packaging material that wasn't there.

But someone had their midnight oil burning mechanism sitting too close to the window. The next morning, that same someone noticed a big smudge of serendipity on the window. "Eureka! We have discovered black ink! Let's go buy a million lanterns and a million windows so we can go into production!"

You know the rest of the story. Gutenberg and all that. But what you probably didn't know is that TAGA -- the Technical Association of the Graphic Arts -- was there the whole way, providing a forum for the technical leaders of the time to discuss the future of printing, the thixotropic properties of carbon black, and modern techniques of rock packaging.

At the time, black ink was a solution looking for a problem to solve. Very few people know this, but it was at TAGA that the people who were working to find the most efficient means for putting black ink on paper met the people who were looking for a way to record the spoken word. TAGA provided the spark that created the written word.

The 2014 TAGA conference was a continuation of this ancient tradition. Here are some highlights. I make no apologies for all the cool stuff that I missed because I was hung over.

Daniel Dejan of Sappi Paper gave one of the keynotes. Brain scans done of people reading tablets and people reading physical paper are actually different. People are more likely to read a book linearly, and read a tablet with "skim/click/jump around". Studies have shown that people tend to trust stuff committed to paper more than stuff on the web, and also have better retention for stuff on paper. (Please print this blog post out before reading.) These are reasons to utilize print.

How can you target advertising for all these avenues?

(from Dejan's talk)

Ian Hole of Esko gave another keynote. There have been some way cool packaging concepts recently. Bombay Sapphire gin used an electroluminescent ink to make the bottle light up in blue. Absolut vodka used a blue thermochromic ink to indicate the vodka was chilled to the proper temperature. Coke has successfully marketed cans with absolutely no words on the can. Smart wine corks can track the temperatures that a bottle has gone through. Smart packaging of medicines can record when a patient is actually taking a medicine. Pringles delivered their potato chip tubes with the logo “these are not tennis balls”. Where? At Wimbledon.

Show stopper from Ian Hole's presentation

Tim Claypole of Swansea University gave yet another of the keynotes. 98% of printed electronics is done with screen printing. Flexo is another technology that could work, but solvents are an issue. The only application of printed electronics that has been successful (from a business standpoint) to date is the printing of blood glucose sensors. This has been very successful. One university group created a 3D printer for chocolates. Look to the Korean Olympics for applications of large area printed displays.

As if three keynotes were not enough, Paul Cousineau and Mark Bohan collaborated to give the fourth keynote. The most important thing I learned is that wide format printing on shrink wrap is being used to make customized coffins.

A brilliant scientist with a huge ego gave a talk about inter-instrument agreement between spectrophotometers. His message is that standardizing one spectro to match another is a tricky business. Due to actual physical differences in the spectros, standardization can make inter-instrument agreement worse. Look for this self-important dude to give a blog summary of the paper soon.

Tony Stanton (Carnegie Mellon) looked at color uniformity of various types of presses. Variability for ink jet: 0.2 ΔE00, for litho: 0.5 ΔE00, for electrophotographic: 1.0 ΔE00.

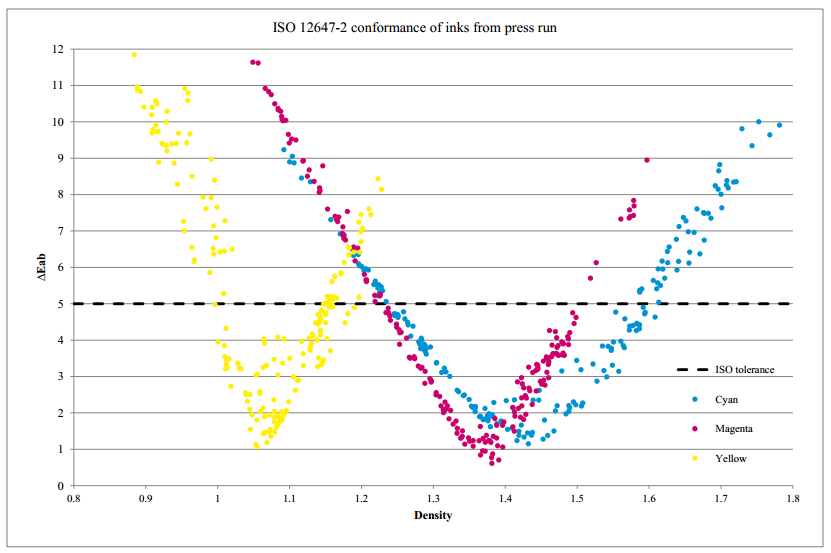

Soren Jensen (Danish School of Media and Journalism) spoke on an approach to meet the color targets of ISO 12647-2. This standard has target L*a*b* values for CMY solids and tolerances that they must be within. It also has target values for the RGB overprints, but does not have tolerances for these. Soren worked out a means for determining the densities that are a) within tolerance for CMY, and b) that minimize the overall error in hitting the RGB targets. He's my buddy cuz he used Beer's law.

Perifarbe plots from Soren's presentation
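For anyone wondering what Beer's law buys you here, this is a minimal sketch of the idea, and definitely not Soren's actual code. It assumes reflectances are measured relative to the paper, ignores surface reflection and ink trapping, and uses made-up numbers for the magenta and yellow solids. Under those assumptions, changing the ink film thickness raises the reflectance to a power (so density scales linearly), and an overprint is just the band-by-band product of the two inks. The function names are mine, for illustration only.

```python
import math

def density(reflectance):
    """Optical density of a reflectance value (0 < reflectance <= 1)."""
    return -math.log10(reflectance)

def scale_reflectance(spectrum, thickness_ratio):
    """Beer's law: paper-relative reflectance raised to the film thickness ratio."""
    return [r ** thickness_ratio for r in spectrum]

def overprint(spectrum_a, spectrum_b):
    """Idealized overprint: multiply paper-relative reflectances band by band."""
    return [a * b for a, b in zip(spectrum_a, spectrum_b)]

# Made-up paper-relative reflectances at (450 nm, 550 nm, 650 nm).
magenta = [0.40, 0.05, 0.85]
yellow = [0.05, 0.90, 0.95]

# Run magenta 10% heavier; its density goes up by the same 10%.
heavy_magenta = scale_reflectance(magenta, 1.10)
print(round(density(magenta[1]), 3), round(density(heavy_magenta[1]), 3))

# Predicted red (M + Y) overprint with the heavier magenta film.
red = overprint(heavy_magenta, yellow)
print([round(r, 4) for r in red])
```

With a model like this, one could try a range of thickness ratios for each ink, throw out the ones that push a solid out of tolerance, and keep the combination that lands the overprints closest to their targets. Converting the predicted spectra to L*a*b* for that comparison is left out of the sketch.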

Bruce Leigh-Myers (RIT) looked at inter-instrument agreement of M1 spectros measuring paper with fluorescent whitening agents. One instrument in his corral was way different.

Ragy Isaac (Goss) gave a tutorial on statistical methods, driving home the point that you shouldn't make important decisions without doing a little bit of statistics. His talk involved paper helicopters.

Xiaoyin Rong (Cal Poly) discussed screen printing of electronics. Cell phone touch screens are a potential application for printed electronics. To do this, you want a tiny silver grid laid down on glass. The trace width should be around 2 µm. Current limitations are about 30 µm for gravure and flexo, and 40 µm for screen printing. She looked at using a solid mesh screen as opposed to a woven wire screen. She is still working on this.

Gary Field (Cal Poly) investigated the difference between printing in the order KCMY versus CMYK. Previous studies would run in one order, bring the press down and swap plates, and then run again. The reliability of this sort of test is questionable. Was the change due to changing conditions on the press, or due to the change in the print order? He got around this limitation by running on a five color press with K in the first and last positions. The conclusion is that CMYK produces a bigger gamut. The Dmax is higher, the print is glossier, and the four color solid is closer to neutral.

Martin Habekost (Ryerson University) looked at the print quality (resolution) that is produced by various RIP software. Surprisingly (or maybe not), not all RIPs are created equal. Some RIPs produce plates that result in higher resolution on the printed page.

Doug Bousfield (University of Maine) looked at the tack force that ink applies to paper. One of his comments was that a tack force meter might not be really measuring what we think it’s measuring. Heresy. Pure heresy.

Michael Carlisle (ArjoWiggins creative papers) spoke on the development of a special paper for electronic printing. They typically print on plastic substrates, but this needs to be laminated to paper. This special paper is especially smooth, which is what is needed to make those 5 micron traces.

Really cool SEM images from Michael's presentation

Sasha Pekarovicova (Western Michigan University) talked about the use of soybean oil to take the ink out of recycled paper. Bet you didn't stop to think about where the ink goes when you put the newspaper into the recycle bin!

Joerg Daehnhardt (Heidelberg) spoke about fanout on extra-wide sheet-fed presses. The cause is different from the fanout that we all know and love on web presses, but the results are the same. It is caused by the grippers pulling laterally, and uneven inking putting strange elasticity on the paper. They use a system of cameras to determine the misregister and actually stretch the plate to compensate.

Don Duncan (Wikoff Color) gave a talk on migration of icky stuff from the printing process into the stuff inside the packaging. This is a hot political issue and Nestlé and Mueslix have found it can be a big source of bad PR. Nestlé developed a list of naughty chemicals that they don’t want to be used in the print process. The Swiss government rubber stamped this, and this list is on its way to becoming an EU ruling. The list is perhaps a bit stringent, maybe even draconian. It is also a bit of an issue since Nestlé requires that fluorescent inks be used on the packaging of certain candies, and all fluorescent pigments are on Nestlé’s list of forbidden chemicals.

Mandy Wu (Appalachian State University) chronicled a project at her university for the students to offer commercial printing to businesses in the community. They made a point of using green technology.

Thomas Klein (Esko) gave a very informative presentation about high definition flexo plates. The normal flexo plate has flat topped cells. This causes problems with highlights, since you can’t reliably print below a 12% dot, and also with solids because the solids get mottled. You can deal with the solids by creating a cell that has microcells. This evens out the laydown and gives a big boost in density. At the highlight end, the issue is dealt with by making cells that are rounded at the top.

Nir Mosenson (HP) spoke on an electrophotographic printer, in particular how they managed to scale up their original system to one twice as wide. He provided a very clear description of how this sort of printer works. One thing I found interesting was the use of a rotating set of mirrors mounted together as a hexagonal prism being used to guide the laser beam to charge the cylinder.

Kirk Szymanski (Ricoh) described the process by which one assures that a toner-based printer is made consistent in color. Inline measurement of color is important. Even for a printer like this with very quick startup (compared with web offset) there is a small warm-up time. Typically 7 to 10 sheets are required to provide stable printing. The lasers need to be linearized across the sheet. There are inherent differences in color between a printer like this and web offset (and ISO 12647-2) that must be calibrated out. Density is a good start, but tweaking must be done in CIELAB.

Stephen Lapin (PCT Electron Beam) compared electron beam (EB) curing to conventional ink drying. In heatset web offset, the ink is “dried” by flashing off the oils through the use of heat. In UV cured flexo, a photoinitiator is added to the ink. This photoinitiator is a good absorber of UV, so it readily captures the energy from the UV lights. EB curing relies on the fact that all matter will absorb energy from an electron beam. The higher the specific gravity (I hate to say “density”), the more absorption of the energy. EB curing uses a special ink that polymerizes when the energy is absorbed; there are no oils to flash off.

Tim Claypole (Swansea) presented a service that they have started. Newspaper printers will contract with Swansea to rate them. The printer prints a test target, which is measured and graded at Swansea. This service differs from other software packages in that it is an independent evaluation, and in that it compares one printer against another.

Sasha Pekarovicova (Western Michigan University) gave a talk about their experiences in creating a printed electronics capacitor where phthalocyanine ink (commonly called cyan) is used as the dielectric. The pinholing effect was problematic. (If I understand the issue correctly, this limits the breakdown voltage of the capacitor.)

Bruce Leigh-Myers (RIT) described a G7 calibration that was done on a gravure press by his colleague Bob Chung. Typically, a G7 calibration takes a few iterations to dial the press in. On gravure, this is very costly, since it costs an arm and a leg and two tickets to Disneyland to engrave the cylinders. The idea is to do the first press run virtually. The first run is performed by attaching a gravure ICC profile to the P2P target file and reading the values out of the digital image. Simple enough, and it did work. Only one set of cylinders needed to be engraved.

Udi Arieli (EFI) gave an enlightening talk (with many cute videos) about his theory of global optimization. This is the idea that, for the sake of efficiency, a printing process should not be thought of as strictly a printing process. Concentrating on optimizing just one process unit can hurt the efficiency of another. I have known Udi for years, but had never heard him speak until this conference. I had no idea he was such a smart guy.

I know I missed some good talks, but I was either hung over (as I said), forgot my pen, was signing autographs for a student, or the topic was way over my head. For lack of time, I have omitted reviews of all the students' journals - which I thought were fabulous this year!

Oh... I almost forgot one thing. I am now the VP of papers. That means - talk to me if you want a spot in next year's conference.