I checked out the yellow pages to find a yellow cab to take me down the yellow brick road to the yellow submarine so I could get a can of mellow yellow. So many yellows! Are they all the same?

I have used the word metamerism in three previous blogposts: RGB into Lab, Scribbling away that scratch on my car, and Is your green the same as my green. But, I really haven't explained why there is this thing called metamerism. Why is it that a pair of colors may match under one light, but not under another?

Example 1

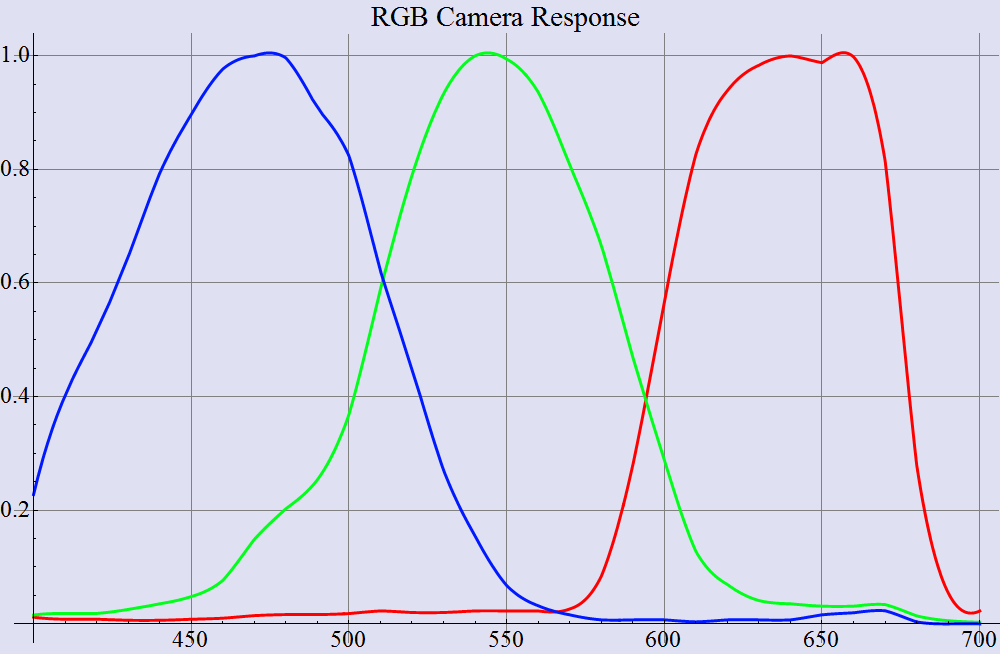

In the image below, I show the spectrum of one particular yellow printing ink. The image also shows the spectral response of the three cones in the human eye, with the L (long), M (medium), and S (short) wavelength cone responses in red, green, and blue, respectively.

The spectrum of yellow ink, along with the sensors in the eye

If I can be so presumptuous as to paraphrase what the yellow graph is saying, we see that above about 530 nm (in the green to red parts of the rainbow), the yellow ink has a pretty high reflectance, somewhere up around 90%. Below 470 nm (in the violet to blue part of the rainbow), the reflectance is pretty darn small.

To continue my presumption, consider what an S cone (the blue plot) is seeing when it is pointed at this ink. It sees light in the wavelength range from 400 nm to 500 nm. This channel is pretty quiet. There just isn't a whole lot of light reflecting from this region of the spectrum.

This darkness is all completely unbeknownst to the L and M cones. In their little view of the electromagnetic spectrum (above 470 nm), the yellow ink looks a whole lot like white. And note that the amount of light seen by the M cones is pretty much the same as the amount of light seen by the L cones.

So, that, my friends, is what the spectrum of yellow looks like. By the way, the CIELAB (L*a*b*) value is 94.04, -6.07, 116.18.

Example 2

For my next encore, I show the spectrum of a hypothetical yellow LED. I say hypothetical because this isn't an actual measurement, or even a typical measurement provided by the manufacturer. I started with the spectrum of an amber LED, fit a Lorentzian function to it, and then adjusted the wavelength and width just a little to make it the same color as the yellow ink. The CIELAB value of the hypothetical yellow LED is 94.04, -5.99, 116.19. By fudging the LED a little, I got the color within a tenth of a deltaE.
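For the curious, a Lorentzian is just a peaked curve with two knobs: where the peak sits and how wide it is. Here is a minimal sketch in Python. The 580 nm center and 30 nm width are placeholders picked for illustration; they are not the values behind the plot.

```python
def lorentzian(wavelength_nm, center_nm, fwhm_nm):
    """Lorentzian line shape, scaled so the peak value is 1.

    fwhm_nm is the full width at half maximum: the curve drops to 0.5
    at center_nm plus or minus fwhm_nm / 2.
    """
    half_width = fwhm_nm / 2.0
    return 1.0 / (1.0 + ((wavelength_nm - center_nm) / half_width) ** 2)


# Hypothetical parameters, assumed for this example only.
CENTER_NM = 580.0
FWHM_NM = 30.0

# Sample the visible range every 10 nm.
wavelengths = list(range(400, 701, 10))
spectrum = [lorentzian(w, CENTER_NM, FWHM_NM) for w in wavelengths]

for w, value in zip(wavelengths, spectrum):
    print(f"{w} nm  {value:.3f}")
```

Nudging `CENTER_NM` slides the color along the rainbow, and changing `FWHM_NM` makes the slice fatter or skinnier.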

The spectrum of a yellow LED, along with the sensors in the eye

One thing I should mention about the graph. I scaled the plot of the LED by a factor of about four, just to make it fit with the rest of the plots. In terms of the real real world, I turned up the LED so that in one narrow range of the spectrum it was about four times as bright as the ink, but on the whole, the color -- what the human eye would see -- was nearly identical.

Once again, we see that the amount of light that is seen by the S cone is minimal. The light from the yellow LED is seen in the L and M cones, and in something like equal measure.

So, forget what you learned in that last section. This is the true spectrum of the color yellow. It is a slice out of the yellow part of the rainbow.

Example 3

For my second encore, I will perform the same act of creation of a color that looks indistinguishable from the yellow ink. This time, I will do it with not one, but with the heretofore unimaginable quantity of two LEDs! From my bag of hypothetical LEDs, I draw a red and a green LED, and mix the light emitted from them. I used an ordinary whisk, but you could certainly use a Kitchen Aid. You can plainly see the peak emission of the LEDs at 555 nm and at 640 nm.

The spectrum of a mix of red and green LEDs, along with the sensors in the eye

By a small act of hypotheticalry, I managed to adjust the wavelength and peak width of these two LEDs so that they emit light with a CIELAB value of 94.04, -6.05, 116.55. I will admit that I did not get quite as close to the original color of the yellow ink. I got tired of futzing with the hypothetical parameters of the hypothetical LEDs. But, the colors are still close enough to call a really darn good match. And it really could have been perfect if I weren't so darn lazy.
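To put numbers on how close these matches are, here is a small sketch that computes the color difference from the ink for both LED versions. It assumes the plain 1976 deltaE, which is simply the straight-line distance between two CIELAB values. A different deltaE formula would give somewhat different numbers.

```python
import math


def delta_e_76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in CIELAB."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(lab1, lab2)))


# CIELAB values quoted in the three examples.
ink = (94.04, -6.07, 116.18)
one_led = (94.04, -5.99, 116.19)
two_leds = (94.04, -6.05, 116.55)

print(f"ink vs one LED:  {delta_e_76(ink, one_led):.2f}")   # 0.08
print(f"ink vs two LEDs: {delta_e_76(ink, two_leds):.2f}")  # 0.37
```

Under that assumption, the single LED lands within a tenth of a deltaE of the ink, and the two-LED mix is a bit further off, almost entirely in b*.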

Oh... remember that thing I said about scaling the plot in the previous one to protect the innocent? The same holds for this one.

At the risk of repeating myself, I will recount what the cones see when they gaze upon this pair of LEDs. It's the same as before. No S, and about an even amount of L and M.
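Here is a rough sketch of the mixing trick: find how much green and red light it takes to give the L and M cones the same signal as a single yellow LED. Nearly everything in it is an assumption made for illustration. The cone curves are crude Gaussian stand-ins rather than measured cone sensitivities, the 580 nm yellow and the 30 nm widths are invented, and only the 555 nm and 640 nm peaks come from the text above.

```python
import math

WAVELENGTHS = range(400, 701)  # 1 nm steps across the visible range

# Crude Gaussian stand-ins for the cones: (peak nm, sigma nm). Assumed values.
CONES = {"L": (565.0, 45.0), "M": (540.0, 45.0), "S": (445.0, 25.0)}


def lorentzian(w, center, fwhm):
    """Lorentzian line shape with a peak value of 1."""
    return 1.0 / (1.0 + ((w - center) / (fwhm / 2.0)) ** 2)


def cone_response(spectrum, cone):
    """Sum of spectrum times cone sensitivity over all wavelengths."""
    peak, sigma = CONES[cone]
    return sum(
        spectrum(w) * math.exp(-0.5 * ((w - peak) / sigma) ** 2)
        for w in WAVELENGTHS
    )


def yellow_led(w):
    return lorentzian(w, 580.0, 30.0)  # hypothetical yellow LED


def green_led(w):
    return lorentzian(w, 555.0, 30.0)  # peak from the text, width assumed


def red_led(w):
    return lorentzian(w, 640.0, 30.0)  # peak from the text, width assumed


def solve_two_led_mix(target, green, red):
    """Weights (green, red) so the mix matches the target's L and M signals.

    Two unknowns, two equations, solved with Cramer's rule.
    """
    lg, mg = cone_response(green, "L"), cone_response(green, "M")
    lr, mr = cone_response(red, "L"), cone_response(red, "M")
    lt, mt = cone_response(target, "L"), cone_response(target, "M")
    det = lg * mr - lr * mg
    return (lt * mr - lr * mt) / det, (lg * mt - lt * mg) / det


green_weight, red_weight = solve_two_led_mix(yellow_led, green_led, red_led)


def mix(w):
    return green_weight * green_led(w) + red_weight * red_led(w)


print(f"green weight {green_weight:.3f}, red weight {red_weight:.3f}")
for cone in "LMS":
    print(cone, f"{cone_response(yellow_led, cone):.3f}",
          f"{cone_response(mix, cone):.3f}")
```

With these toy curves both weights come out positive, so the mix is something you could actually build. The sketch only forces L and M to agree. The S signal is not matched exactly; it is just small for both lights.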

So, once again forget what you learned in the two previous sections. This bimodal spectrum with two humps is the only real and true spectrum of the color yellow. This Bactrian spectrum is the actual spectrum, and the Dromedary from the previous section is nothing more than a figmentary pigmentary unicorn. And that first spectrum that's as flat as my head? Fahgeddaboudit.

Will the real yellow please stand up?

I hope that I'm not the only one who is confused. I have given three different spectra. And I have claimed in each case that the spectrum represents the "correct" version of the spectrum of yellow.

The versions of yellow

My favorite scene from Fiddler on the Roof has Tevye talking in the courtyard with some of his friends. The first guy says that Obama has brought prosperity to the country. Tevye says "Yah, you're right." The second man in the square says that Obama has completely ruined the country. Tevye strokes his beard and once again says "Ahhh... you're right." The third gentleman questions Tevye, "How can they both be right?!?!" To this, Tevye strokes his beard and says "Ahh yes... you're right!"

How can all three of these spectra claim to be yellow? Which one is the true yellow, and which two are the impostors? Fear not. None of them are impostors. They are all spectra of yellow. All three spectra would be perceived by the eye as being yellow, and (to a pretty darn close degree) all three are the same exact shade of yellow.

Consider the silhouette below. Is this woman holding a ball? Could be. Maybe this woman is holding a manhole cover? Could be. Or then again, maybe she is holding a garbage can. That could also be. From this view, we can't tell. The silhouette has projected the three-dimensional shape down to two dimensions.

Ball? Manhole cover? Garbage can?

The same thing happens when we see colors. We like to think that we see the whole spectrum from 400 nm to 700 nm, and we kinda do. I mean, there are no holes in the rainbow, right? But our eye only has three sampling points. Mathematically, we would say that the cones of the eye are performing a projection of an infinite-dimensional vector in spectral-space onto a three-dimensional space.

If the whole idea of infinite dimensions is a bit hard to fathom, that's ok. I don't understand it either. Suffice it to say that our eyes don't individually perceive every little slice of the whole spectrum. There is considerable data loss, so there are cases of dissimilar spectra that will look like exactly the same color.
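For anyone who does want it in symbols, here is a sketch of that projection. The symbols are assumptions made for this illustration: $\Phi(\lambda)$ is the spectrum of light reaching the eye, and $\bar{l}$, $\bar{m}$, $\bar{s}$ are the sensitivity curves of the three cones.

```latex
\begin{aligned}
L &= \int_{400}^{700} \Phi(\lambda)\,\bar{l}(\lambda)\,d\lambda \\
M &= \int_{400}^{700} \Phi(\lambda)\,\bar{m}(\lambda)\,d\lambda \\
S &= \int_{400}^{700} \Phi(\lambda)\,\bar{s}(\lambda)\,d\lambda
\end{aligned}
```

A whole curve goes in and three numbers come out. Two different spectra $\Phi_1 \neq \Phi_2$ look like the same color whenever all three numbers agree, which is the same as saying that their difference is invisible to every cone:

```latex
\int_{400}^{700} \bigl(\Phi_1(\lambda) - \Phi_2(\lambda)\bigr)\,\bar{c}(\lambda)\,d\lambda = 0
\qquad \text{for each } \bar{c} \in \{\bar{l}, \bar{m}, \bar{s}\}
```

That is only three conditions on a curve that has endless ways to wiggle, which is why there is so much room for different spectra to land on the same color.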

And that's what metamerism is all about. "Yellow" is not a certain spectral curve. Yellow is what we perceive when the S cone has little response, and the L and M cones have high and nearly equal response.